NIST Selects HQC as the Fifth Post-Quantum Algorithm: What You Need to Know

The Evolution of Post-Quantum Cryptography: NIST’s Fifth Algorithm Selection and Its Impact

Introduction

Quantum computing is no longer just a theoretical curiosity—it is advancing towards real-world applications. With these advances comes a major challenge: how do we keep our data secure when today’s encryption methods become obsolete?

Recognising this urgent need, the National Institute of Standards and Technology (NIST) has been working to standardise cryptographic algorithms that can withstand quantum threats. On March 11, 2025, NIST announced that it had selected Hamming Quasi-Cyclic (HQC) as the fifth algorithm in its post-quantum standardisation effort. HQC is a code-based key encapsulation mechanism that will serve as a backup to ML-KEM (Module-Lattice-Based Key-Encapsulation Mechanism), so that key establishment does not depend on a single family of mathematical assumptions. NIST expects to release a draft HQC standard about a year after the announcement and a final standard in 2027.

Business and Regulatory Implications

Why This Matters for Organisations

For businesses, governments, and security leaders, the post-quantum transition is not just an IT issue—it is a strategic necessity. The ability of quantum computers to break traditional encryption is not a question of if, but when. Organisations that fail to prepare may find themselves vulnerable to security breaches, regulatory non-compliance, and operational disruptions.

Key Deadlines & Compliance Risks

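- By 2030: Under NIST's draft transition guidance (NIST IR 8547), quantum-vulnerable public-key algorithms that offer 112 bits of security, such as RSA-2048, would be deprecated after 2030.

- By 2035: The same draft guidance would disallow quantum-vulnerable public-key cryptography after 2035, meaning organisations must adopt the new standards or risk compliance failures.

- Government Mandates: In the United States, Office of Management and Budget memorandum M-23-02 already requires federal agencies to inventory their cryptographic systems and plan their post-quantum migration, which in turn affects the vendors that supply them. The Cybersecurity and Infrastructure Security Agency (CISA) supports this work through its Post-Quantum Cryptography Initiative.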

- EU Regulations: The European Union is advocating for algorithm agility, urging businesses to integrate multiple cryptographic methods to future-proof their security.

How Organisations Should Respond

To stay ahead of these changes, organisations should:

- Implement Hybrid Cryptography: Combining classical and post-quantum encryption ensures a smooth transition without immediate overhauls.

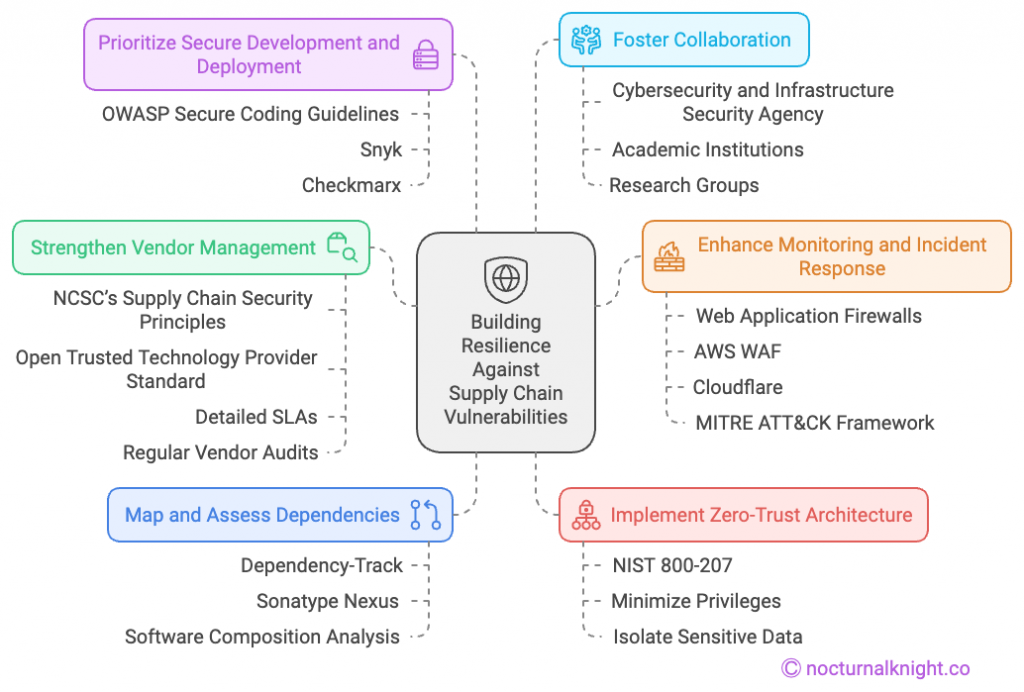

- Monitor Supply Chain Dependencies: Ensuring Software Bill-of-Materials (SBOM) compliance can help track cryptographic vulnerabilities.

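- Leverage Automated Tooling: Tools such as Sigstore, which automates the signing and verification of software artefacts, can reduce the manual effort of rotating keys and signatures during the transition.

- Pilot Test Quantum-Resistant Solutions: By 2026, organisations should be piloting hybrid deployments that pair a classical key exchange with ML-KEM, and evaluating HQC in test environments as its draft standard matures, to assess performance and scalability.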
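The hybrid approach in the list above can be sketched in a few lines. This is a minimal illustration, not a vetted protocol: it assumes each key exchange has already produced a fixed-length shared secret, and it combines them with HKDF-SHA256 (RFC 5869) built from the Python standard library. The function names, the `hybrid-kem-demo` label, and the transcript string are hypothetical, and the two secrets are random placeholders standing in for real classical and post-quantum outputs.

```python
import hashlib
import hmac
import os


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF (RFC 5869) with SHA-256: extract a pseudorandom key, then expand it."""
    prk = hmac.new(salt or b"\x00" * 32, ikm, hashlib.sha256).digest()
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def combine_secrets(classical_ss: bytes, pq_ss: bytes, transcript: bytes) -> bytes:
    """Derive one session key from both shared secrets.

    Both inputs are assumed to be fixed-length, so plain concatenation is
    unambiguous. The derived key changes if either secret changes.
    """
    ikm = classical_ss + pq_ss
    return hkdf_sha256(ikm, salt=b"", info=b"hybrid-kem-demo" + transcript)


# Placeholders only: in a real deployment these would come from, for example,
# an elliptic-curve key exchange and a post-quantum KEM decapsulation.
classical_ss = os.urandom(32)
pq_ss = os.urandom(32)
session_key = combine_secrets(classical_ss, pq_ss, b"client-hello|server-hello")
print(session_key.hex())
```

Because the session key depends on both inputs, an attacker would need to recover both secrets to derive it, which is the property that lets organisations adopt post-quantum algorithms without giving up the classical ones straight away.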

Technical Breakdown: Understanding HQC and Its Role

Background: The NIST PQC Standardisation Initiative

Since 2016, NIST has been leading the effort to standardise post-quantum cryptography. The urgency stems from the fact that Shor’s algorithm, when executed on a sufficiently powerful quantum computer, can break RSA, elliptic-curve cryptography (ECC), and Diffie-Hellman key exchange, which together form the foundations of today’s secure communications.

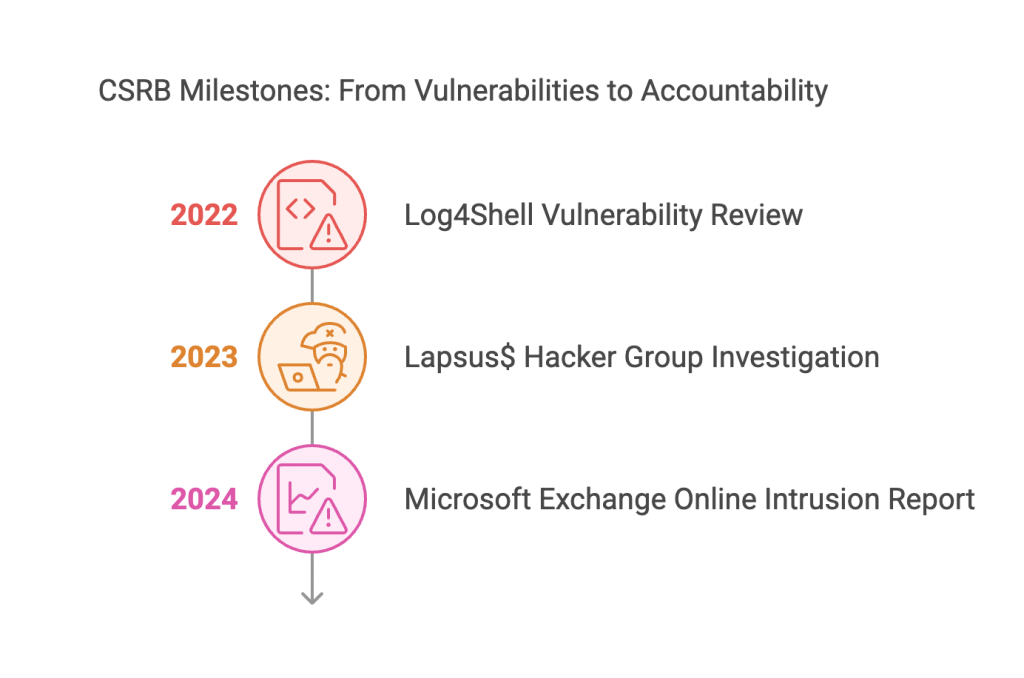

How We Got Here: NIST’s Selection Process

- August 2024: NIST finalised its first three PQC standards:

  - FIPS 203 – ML-KEM (for key encapsulation)

  - FIPS 204 – ML-DSA (for digital signatures)

  - FIPS 205 – SLH-DSA (for stateless hash-based digital signatures)

- March 2025: NIST selected HQC as a code-based backup to ML-KEM, making it the fifth selection after those three standards and the forthcoming FN-DSA signature standard. HQC provides an alternative in case lattice-based approaches face unforeseen vulnerabilities.

What Makes HQC Different?

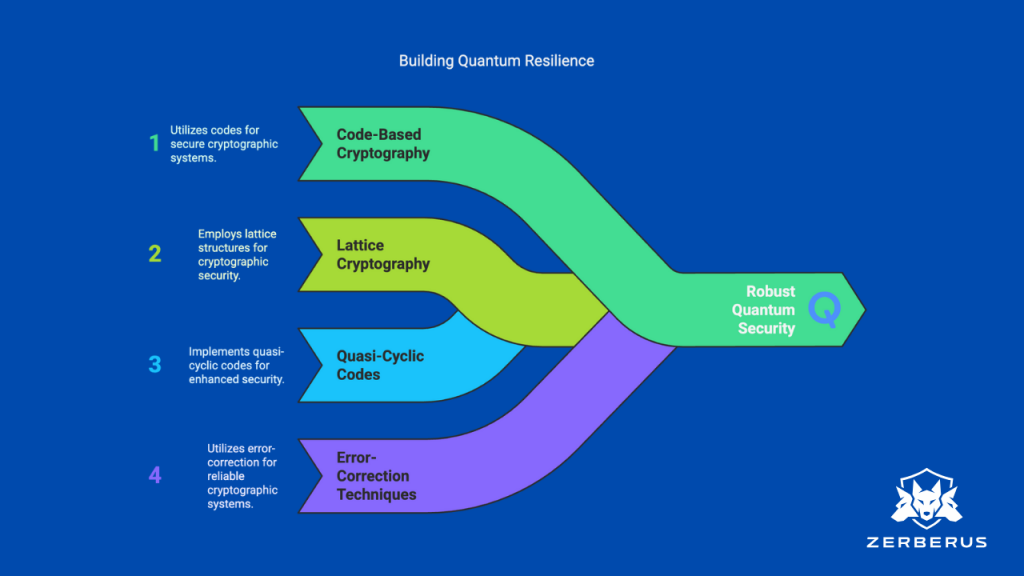

HQC offers a code-based alternative to lattice cryptography, relying on quasi-cyclic codes and error-correction techniques.

- Security Strength: HQC is based on the hardness of decoding random quasi-cyclic codes, known as the Quasi-Cyclic Syndrome Decoding (QCSD) problem. Its IND-CCA2 security is proven in the quantum random oracle model.

- Efficient Performance:

  - HQC public keys are roughly 2.2 KB at the lowest security level, far smaller than Classic McEliece’s public keys, which range from hundreds of kilobytes to more than 1 MB.

  - Decryption is fast, and the decryption failure rate is negligibly small and backed by a rigorous analysis. HQC works in the Hamming metric, not the rank metric.

- A Safety Net for Cryptographic Diversity: By introducing code-based cryptography, HQC provides a backup if lattice-based schemes, such as ML-KEM, prove weaker than expected.
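To make the "quasi-cyclic codes and error-correction" idea concrete, here is a toy sketch of HQC-style encryption. It is an illustration under heavy assumptions, not the standardised algorithm and not secure: the parameters are tiny, a simple repetition code stands in for the concatenated Reed-Muller and Reed-Solomon code that real HQC uses, and the transform that turns the encryption scheme into an IND-CCA2 KEM is omitted. All names and parameter values are chosen for illustration.

```python
import random

N = 347    # ring dimension (toy-sized; real HQC uses vectors of over 17,000 bits)
REP = 43   # each message bit is repeated REP times
K = 8      # message length in bits; K * REP = 344 <= N
W = 3      # Hamming weight of every sparse secret or noise vector


def sparse(rng, weight):
    """Random binary vector of length N with exactly `weight` ones."""
    v = [0] * N
    for i in rng.sample(range(N), weight):
        v[i] = 1
    return v


def add(a, b):
    """Addition in GF(2)^N (bitwise XOR)."""
    return [x ^ y for x, y in zip(a, b)]


def mul(a, b):
    """Cyclic convolution mod 2, i.e. the product in GF(2)[X]/(X^N - 1)."""
    out = [0] * N
    b_idx = [j for j, bit in enumerate(b) if bit]
    for i, bit in enumerate(a):
        if bit:
            for j in b_idx:
                out[(i + j) % N] ^= 1
    return out


def encode(bits):
    """Repetition code, zero-padded to length N."""
    cw = [b for b in bits for _ in range(REP)]
    return cw + [0] * (N - len(cw))


def decode(word):
    """Majority vote over each block of REP positions."""
    return [int(sum(word[i * REP:(i + 1) * REP]) > REP // 2) for i in range(K)]


def keygen(rng):
    h = [rng.randrange(2) for _ in range(N)]   # public random vector
    x, y = sparse(rng, W), sparse(rng, W)      # sparse secrets
    return (h, add(x, mul(h, y))), y           # public key (h, s), secret key y


def encrypt(rng, pk, bits):
    h, s = pk
    r1, r2, e = sparse(rng, W), sparse(rng, W), sparse(rng, W)
    u = add(r1, mul(h, r2))
    v = add(add(encode(bits), mul(s, r2)), e)
    return u, v


def decrypt(y, ct):
    u, v = ct
    # v + u*y = encode(m) + x*r2 + r1*y + e: a codeword plus low-weight noise.
    return decode(add(v, mul(u, y)))


rng = random.Random(2025)
pk, sk = keygen(rng)
message = [1, 0, 1, 1, 0, 0, 1, 0]
assert decrypt(sk, encrypt(rng, pk, message)) == message
```

With these toy parameters the residual noise has weight at most 3·3 + 3·3 + 3 = 21, which is below the 22 errors needed to flip a majority vote over 43 positions, so decryption always succeeds here. Real HQC parameters instead make failure astronomically unlikely rather than impossible, which is why its failure-rate analysis matters.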

Challenges & Implementation Considerations

Cryptographic Diversity & Risk Mitigation

- Systemic Risk Reduction: A major breakthrough against lattice-based schemes would not, on its own, affect HQC, whose security rests on a different hardness assumption. This keeps a standardised fallback available.

- Regulatory Alignment: Many global cybersecurity frameworks now advocate for algorithmic agility, aligning with HQC’s role.
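Algorithmic agility, as described above, largely comes down to selecting algorithms through configuration rather than hard-coding them. The sketch below is one hypothetical way to express that: a small registry tags each key encapsulation mechanism with its mathematical family, and a selector skips any family that policy has disabled. The registry contents, the parameter-set labels, and the `select_kem` function are illustrative assumptions, not part of any standard or library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KemInfo:
    name: str
    family: str  # the hardness assumption family, e.g. "lattice" or "code"


# Illustrative registry; a real system would map each name to an implementation.
REGISTRY = {
    "ML-KEM-768": KemInfo("ML-KEM-768", "lattice"),
    "HQC-128": KemInfo("HQC-128", "code"),
}


def select_kem(preference, disabled_families=frozenset()):
    """Return the first preferred KEM whose family is still permitted."""
    for name in preference:
        info = REGISTRY.get(name)
        if info is not None and info.family not in disabled_families:
            return info
    raise LookupError("no permitted KEM in the preference list")


preference = ["ML-KEM-768", "HQC-128"]
print(select_kem(preference).name)                # normal operation
print(select_kem(preference, {"lattice"}).name)   # after a lattice break
```

With this shape, responding to a break in one family becomes a policy change (disable `"lattice"`) rather than a code change, which is the practical benefit of having HQC standardised alongside ML-KEM.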

Trade-offs for Enterprises

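- Larger Key Sizes: HQC public keys (about 2.2 KB at the lowest security level) are nearly three times the size of ML-KEM’s at the comparable level (800 bytes for ML-KEM-512), and HQC ciphertexts are larger still. HQC also needs more computation than ML-KEM, so deployments should budget for extra bandwidth, storage, and processing power.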

- Legacy Systems: Organisations must modernise their infrastructure to support code-based cryptography.

- Upskilling & Training: Engineers will need expertise in error-correcting codes, a different domain from lattice cryptography.

Looking Ahead: Preparing for the Post-Quantum Future

Practical Next Steps for Organisations

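- Conduct a Cryptographic Inventory: Use NIST’s draft transition report, NIST IR 8547 (“Transition to Post-Quantum Cryptography Standards”), to assess which existing encryption methods are quantum-vulnerable and when they must be retired.

- Engage with Security Communities: Industry groups like the PKI Consortium and NIST Working Groups provide guidance on best practices.

- Monitor Additional Algorithm Standardisation: NIST chose HQC over BIKE and has said it does not plan to standardise BIKE, but it may still standardise Classic McEliece in future, depending on that algorithm’s progress through ISO standardisation.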
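A cryptographic inventory can start as something very simple. The sketch below assumes you already have a list of systems and the public-key algorithm each one uses (for example, extracted from certificates or an SBOM), and it tags each entry as needing migration, already post-quantum, or needing manual review. The `Asset` type, the algorithm sets, and the sample entries are hypothetical and deliberately incomplete.

```python
from dataclasses import dataclass

# Public-key families that Shor's algorithm breaks (not an exhaustive list).
QUANTUM_VULNERABLE = {"RSA", "DSA", "DH", "ECDH", "ECDSA", "ED25519", "X25519"}
# Families selected by NIST for post-quantum standardisation.
POST_QUANTUM = {"ML-KEM", "ML-DSA", "SLH-DSA", "HQC"}


@dataclass
class Asset:
    system: str
    algorithm: str  # e.g. "RSA-2048" or "ML-KEM-768"


def family(algorithm: str) -> str:
    """Strip the parameter suffix: 'RSA-2048' -> 'RSA', 'ML-DSA-65' -> 'ML-DSA'."""
    name = algorithm.upper()
    # Try longer family names first so 'ML-DSA-65' is not mistaken for 'DSA'.
    for fam in sorted(QUANTUM_VULNERABLE | POST_QUANTUM, key=len, reverse=True):
        if name == fam or name.startswith(fam + "-"):
            return fam
    return name


def classify(asset: Asset) -> str:
    fam = family(asset.algorithm)
    if fam in QUANTUM_VULNERABLE:
        return "migrate"
    if fam in POST_QUANTUM:
        return "ok"
    return "review"  # symmetric ciphers, hashes, or anything unrecognised


inventory = [
    Asset("vpn-gateway", "RSA-2048"),
    Asset("api-tls", "ECDSA-P256"),
    Asset("pilot-service", "ML-KEM-768"),
    Asset("disk-encryption", "AES-256"),
]
for asset in inventory:
    print(f"{asset.system}: {asset.algorithm} -> {classify(asset)}")
```

Entries tagged `review` are not necessarily safe or unsafe. Symmetric algorithms such as AES are affected differently by quantum attacks and are assessed by key length rather than replaced outright.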

Final Thoughts

NIST’s selection of HQC is more than just an academic decision—it is a reminder that cybersecurity is evolving, and businesses must evolve with it. The transition to post-quantum cryptography is not a last-minute compliance checkbox but a fundamental shift in how organisations secure their most sensitive data. Preparing now will not only ensure regulatory compliance but also protect against future cyber threats.
