WazirX Security Breach: What You Need to Know

Major Security Breach at WazirX: Key Details and How to Protect Yourself

In a shocking turn of events, WazirX, one of India’s premier cryptocurrency exchanges, has fallen victim to a massive security breach. The incident has not only raised alarm bells in the crypto community but also highlighted the pressing need for stringent security measures. Here’s a comprehensive look at the breach, its implications, and how you can safeguard your digital assets.

The WazirX Security Breach: What Happened?

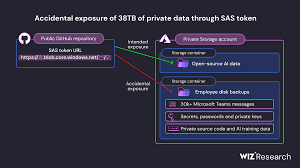

In July 2024, WazirX confirmed a major security breach in which hackers siphoned off more than $230 million worth of various cryptocurrencies from one of the exchange’s multi-signature wallets. According to The Hacker News, the attackers exploited weaknesses in the exchange’s wallet infrastructure to gain unauthorized access to the funds. This incident is part of a broader trend of increasing cyberattacks on cryptocurrency platforms.

Business Standard reported that the breach came to light after a suspicious transfer of roughly $230 million, which raised early questions about the exchange’s internal security controls and, at first, about possible insider involvement.

How Did the Hack Happen?

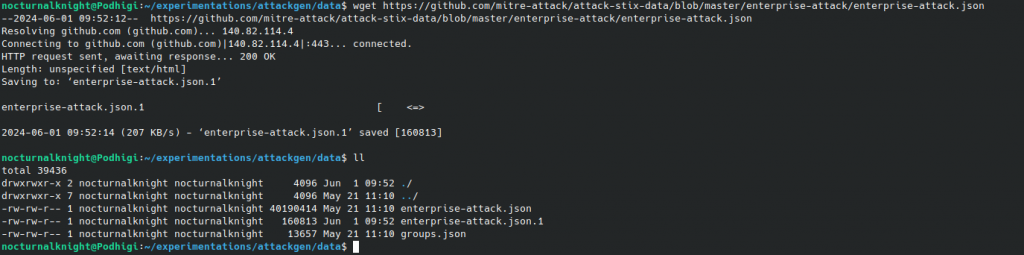

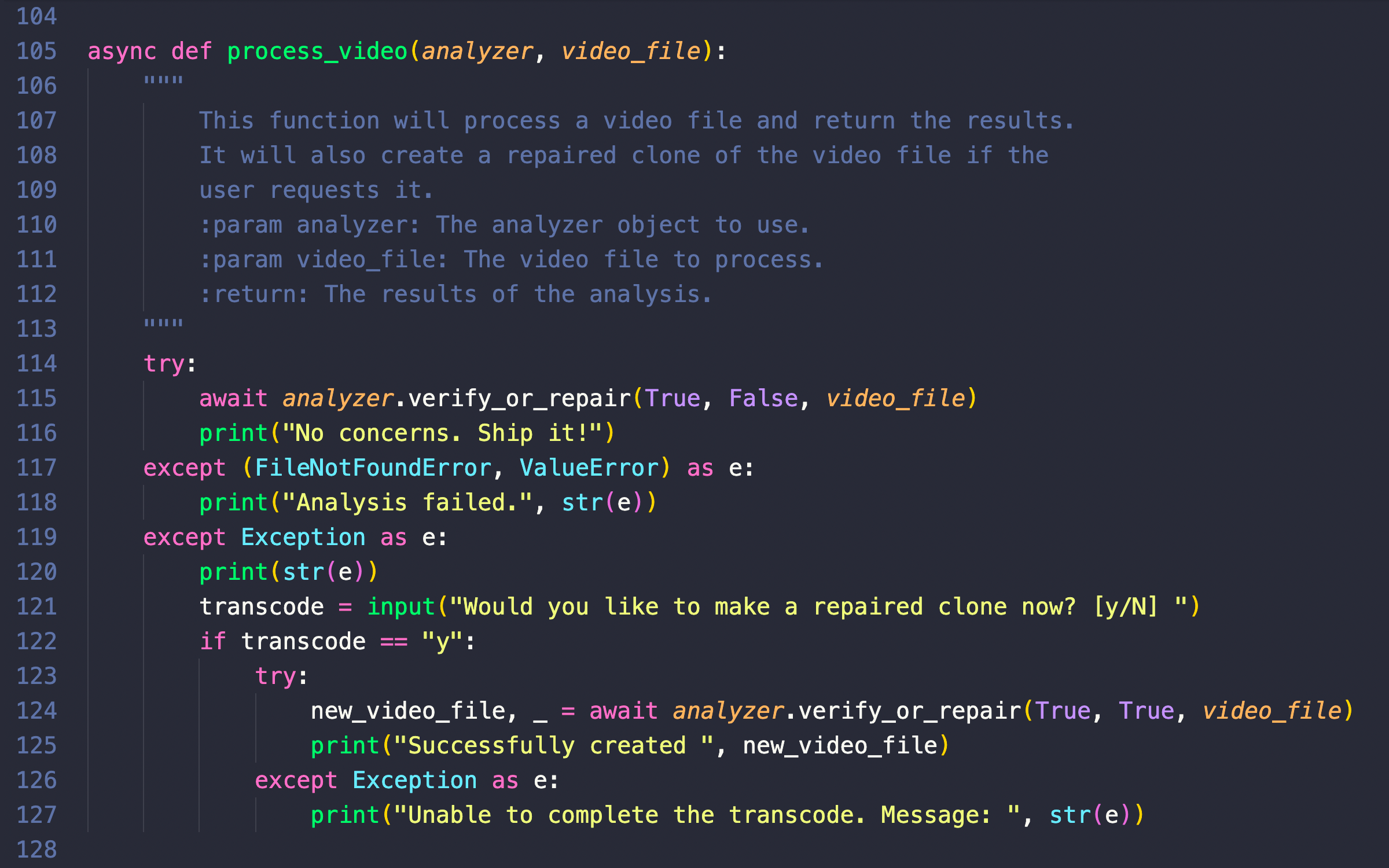

According to the preliminary report by WazirX, the breach involved a complex and coordinated attack on their multi-signature wallet infrastructure:

- Tampering with Transaction Ledger: The attackers managed to manipulate the transaction ledger, enabling unauthorized transactions. This tampering allowed fraudulent withdrawals that initially went unnoticed.

- Manipulating the User Interface (UI): The hackers exploited the interface used to review and approve transactions in order to conceal their activity. According to the report, the details shown on screen did not match what was actually signed, which misled the people approving the transactions.

- Collaboration with Liminal: WazirX worked with Liminal, the digital asset custody provider whose wallet infrastructure secured the affected multi-signature wallet, to investigate the breach, identify the vulnerabilities, and understand the full scope of the attack.

The preliminary investigation indicated that there were no signs of a phishing attack or insider involvement. Instead, the breach was due to external manipulation of the transaction system and user interface.
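
To make the idea of a display mismatch more concrete, here is a minimal Python sketch. It is not WazirX’s or Liminal’s code: the payload fields, the signer names, and the 2-of-3 threshold are all hypothetical, chosen only to illustrate one general safeguard. The approver compares a digest of the transaction shown on screen with a digest of the payload that will actually be signed, and the wallet counts only approvals from known signers.

```python
import hashlib
import json


def payload_digest(payload: dict) -> str:
    """SHA-256 digest of a canonical JSON encoding (keys sorted, no extra spaces)."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def matches_display(displayed: dict, to_be_signed: dict) -> bool:
    """True only if the payload about to be signed is the one shown to the approver."""
    return payload_digest(displayed) == payload_digest(to_be_signed)


def is_approved(approvals: set, known_signers: set, threshold: int) -> bool:
    """Multi-signature rule: at least `threshold` distinct known signers approved."""
    return len(approvals & known_signers) >= threshold


# Hypothetical example data, for illustration only.
shown_on_screen = {"action": "transfer", "to": "0xabc", "amount": 10}
actual_payload = {"action": "change_owner", "to": "0xattacker"}

print(matches_display(shown_on_screen, shown_on_screen))  # True
print(matches_display(shown_on_screen, actual_payload))   # False
print(is_approved({"alice", "bob"}, {"alice", "bob", "carol"}, threshold=2))  # True
```

A check like this only helps if the digest is computed on a device the attacker does not control, such as a hardware signer that decodes and displays the payload independently of the web interface.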

Immediate Actions Taken by WazirX

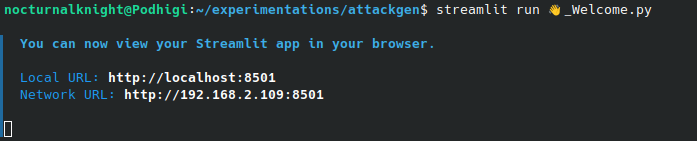

Upon detecting the breach, WazirX swiftly implemented several measures to mitigate the damage:

- Containment: Affected systems were isolated to prevent further unauthorized access.

- User Notification: Users were promptly informed about the breach with advisories to change passwords and enable two-factor authentication (2FA).

- Investigation: WazirX is collaborating with top cybersecurity firms and law enforcement to investigate the breach and identify the culprits.

- Security Enhancements: Additional security measures, including enhanced encryption and stricter access controls, have been put in place.

According to Livemint, WazirX is working closely with global law enforcement agencies to recover the stolen assets and bring the perpetrators to justice. This breach follows a series of high-profile crypto scams and exchange failures, including the collapses of FTX and QuadrigaCX, which have collectively led to billions in losses for investors worldwide.

Implications for WazirX Users

The WazirX security breach has several critical implications:

- Personal Data Exposure: Users’ personal information, including names, email addresses, and phone numbers, may be at risk.

- Financial Loss: The breach has led to significant financial losses, although efforts are underway to recover the stolen funds.

- Trust Issues: Such incidents can severely undermine user trust in cryptocurrency exchanges, emphasizing the need for robust security practices.

How to Protect Your Cryptocurrency Assets

In light of the WazirX security breach, here are some essential steps to protect your digital assets:

- Change Your Passwords: Update your WazirX password immediately and avoid using the same password across multiple platforms.

- Enable Two-Factor Authentication (2FA): Adding an extra layer of security can significantly reduce the risk of unauthorized access.

- Monitor Your Accounts: Regularly check your transaction history for any unusual activity and report suspicious transactions immediately.

- Beware of Phishing Attacks: Be cautious of emails or messages requesting personal information. Verify the source before responding.

- Use Hardware Wallets: For significant cryptocurrency holdings, consider using hardware wallets, which offer enhanced security against online threats.
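
For readers curious about how the two-factor authentication step above works, the sketch below implements the time-based one-time password (TOTP) scheme from RFC 6238, which authenticator apps commonly use. It relies only on the Python standard library. Whether WazirX’s own 2FA uses these exact parameters is an assumption; the 30-second step, six digits, and SHA-1 are simply the common defaults, and the function names are illustrative.

```python
import base64
import hashlib
import hmac
import secrets
import struct
import time
from typing import Optional


def totp(secret_b32: str, at: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Compute an RFC 6238 TOTP code (HMAC-SHA1) for a base32-encoded secret."""
    key = base64.b32decode(secret_b32, casefold=True)
    now = time.time() if at is None else at
    counter = int(now // step)  # number of time steps since the Unix epoch
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation, as defined in RFC 4226
    value = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % (10 ** digits)).zfill(digits)


def verify(secret_b32: str, submitted: str, at: Optional[float] = None) -> bool:
    """Compare a submitted six-digit code with the expected one in constant time."""
    return hmac.compare_digest(totp(secret_b32, at), submitted)


# Illustrative usage: a random 160-bit secret shared once between the
# service and the authenticator app (normally via a QR code).
demo_secret = base64.b32encode(secrets.token_bytes(20)).decode("ascii")
current_code = totp(demo_secret)
print(current_code, verify(demo_secret, current_code))
```

Because the code depends on both the shared secret and the current time, a stolen password alone is not enough to log in. Real services usually also accept the code from an adjacent time step to tolerate clock drift, which this sketch omits.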

The Future of Cryptocurrency Security

The WazirX breach is a wake-up call for the entire cryptocurrency industry. It underscores the necessity for continuous security upgrades and vigilant monitoring to protect users’ assets and maintain trust. As the industry evolves, exchanges must prioritize security to safeguard their platforms against increasingly sophisticated cyber threats.

Further Reading and References

- The Hacker News: WazirX Cryptocurrency Exchange Loses $230 Million in Major Security Breach

- Livemint: Crypto Scams Across Globe – Investors WazirX Hack, Security Breach, FTX, Quadriga, and Morris Coin

- Business Standard: WazirX Suffers Security Breach After Suspicious $230 Million Transfer

- Business Today: Indian Crypto Exchange WazirX Reveals Details About Major Security Breach Causing Theft of Over $230 Million

- WazirX Blog: Preliminary Report – Cyber Attack on WazirX Multisig Wallet

Stay informed and vigilant to protect your investments in the ever-evolving world of cryptocurrencies. By taking proactive steps, you can enhance your digital security and navigate the market with confidence.

#WazirX #Cryptocurrency #SecurityBreach #CryptoHacking #BlockchainSecurity #DigitalAssets #CryptoSafety #WazirXHack #CryptocurrencySecurity