How to Disable an Adblocker-Blocker, or Set Up an Anti-Adblock Killer!

History & Theory:

Digital Advertisement:

I get it. Ads are a necessary evil in the content delivery game. Hell, I have been on the engineering side of content delivery for 10 years myself. Back in the days of the #dotcom #bubble, we endured banner ads. When #BigBrother, oops, #Google came along, it swept the market clean with its (initially, at least) non-intrusive text ads. People even appreciated the contextual advertising: just when you were searching for a suspension for your car, you would see four different ads for OEM-grade replacement suspensions, for grease monkeys to install them, and so on.

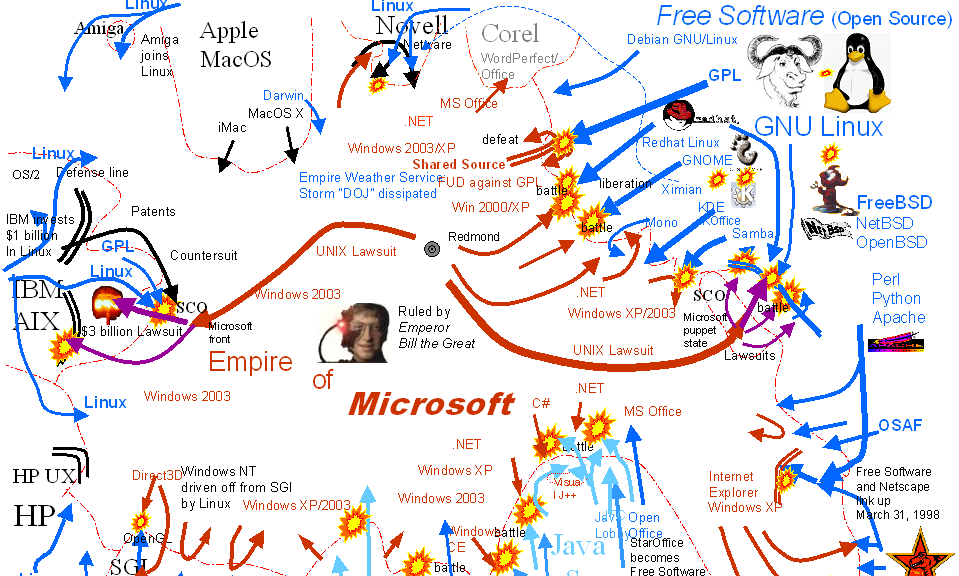

Fast-forward 10 years, and Google is the global powerhouse of advertising. Google knows what your mother's cousin once removed likes, and runs ads tailored to it on no fewer than 50 websites run by Google and countless other affiliates. The convenience soon turned into a mild hindrance, and then a major nuisance. At their core, Google, Microsoft and Yahoo ads were all based on a relevance engine. That is, based on the content currently served by the publisher (the website you're visiting), the engine searches its database for relevant ads and serves the one that matches both that content and a target profile matching yours (this is where privacy advocates go crazy). In its simplest form, the process looks something like the diagram below.

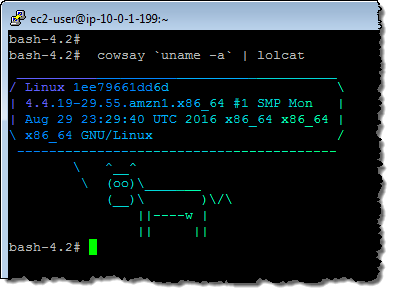
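To make the relevance idea concrete, here is a toy TypeScript sketch. It scores each ad by how many of its keywords appear on the page, and only considers ads whose target audience overlaps the visitor's interests. The `Ad` and `UserProfile` shapes, the keyword-overlap score and the sample inventory are all assumptions made up for illustration; real ad servers are proprietary and far more elaborate.

```typescript
// Toy model of contextual (relevance-based) ad selection.
// All types, names and data are hypothetical; real ad servers are proprietary.

interface Ad {
  id: string;
  keywords: string[]; // terms the advertiser wants to appear next to
  targetInterests: string[]; // audience the advertiser wants to reach
}

interface UserProfile {
  interests: string[]; // what the ad network believes you like
}

// Split page text into a set of lowercase words.
function tokenize(text: string): Set<string> {
  return new Set(text.toLowerCase().match(/[a-z0-9]+/g) ?? []);
}

// Relevance score: how many of the ad's keywords occur on the page.
function relevance(ad: Ad, pageWords: Set<string>): number {
  return ad.keywords.filter((k) => pageWords.has(k.toLowerCase())).length;
}

// Profile match: the ad targets at least one of the user's interests.
function matchesProfile(ad: Ad, user: UserProfile): boolean {
  return ad.targetInterests.some((i) => user.interests.includes(i));
}

// Serve the most relevant ad whose target profile matches the user,
// or nothing if no ad is both relevant (score > 0) and well-targeted.
function selectAd(pageText: string, user: UserProfile, inventory: Ad[]): Ad | undefined {
  const words = tokenize(pageText);
  let best: Ad | undefined;
  let bestScore = 0;
  for (const ad of inventory) {
    if (!matchesProfile(ad, user)) continue;
    const score = relevance(ad, words);
    if (score > bestScore) {
      best = ad;
      bestScore = score;
    }
  }
  return best;
}

const inventory: Ad[] = [
  { id: "oem-suspension", keywords: ["suspension", "car", "oem"], targetInterests: ["cars"] },
  { id: "grease-monkey-install", keywords: ["suspension", "install"], targetInterests: ["cars", "diy"] },
  { id: "running-shoes", keywords: ["running", "shoes"], targetInterests: ["fitness"] },
];

const chosen = selectAd("Best replacement suspension for my car?", { interests: ["cars"] }, inventory);
console.log(chosen?.id); // prints oem-suspension
```

The profile filter runs before scoring, so a perfectly relevant ad is still skipped if it targets a different audience; that second step is the part privacy advocates object to.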

For the inquisitive lot who want the technical details, the real process is a lot more complex than this; it is presented below.

Enter ADBlockers:

Soon enough, people found a way to block the ads. As seen above, all of these adverts are driven by great stores of data on the backend: when a user visits a site, a lot happens behind the scenes, and a script is used to fetch the resulting ad. Technically inclined people started writing custom scripts that stopped the script that renders the ads. In no time, all the bells and whistles, like #blacklist, #whitelist and #regular-expression support, came in. Once modern browsers came with built-in support for content filtering, it was easy to supplement it with custom lists and scripts to block these ads. Adblockers became available for every device, OS and browser, and growing public awareness made their use explode around 2013-2015 (see graph below). So, all seems rosy from here.
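A minimal TypeScript sketch of how a filter list with blacklist, whitelist and regular-expression rules could decide whether to block a request. The rule format is loosely modeled on Adblock Plus conventions (`!` for comments, `@@` for exceptions, `/.../` for regular expressions) but heavily simplified; the function names and sample rules are hypothetical.

```typescript
// Simplified filter-list matcher: blacklist, whitelist and regex rules.
// Loosely modeled on Adblock Plus conventions but NOT a faithful parser.

type Rule = { kind: "block" | "allow"; pattern: RegExp };

// Escape regex metacharacters so a plain rule matches as a literal substring.
function escapeRegExp(s: string): string {
  return s.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Parse one line of the list:
//   "! ..."    comment (ignored)
//   "@@rule"   whitelist exception
//   "/regex/"  regular expression
//   otherwise  literal substring to block
function parseRule(line: string): Rule | undefined {
  const text = line.trim();
  if (text === "" || text.startsWith("!")) return undefined;
  const kind = text.startsWith("@@") ? "allow" : "block";
  const body = kind === "allow" ? text.slice(2) : text;
  const isRegex = body.length > 2 && body.startsWith("/") && body.endsWith("/");
  const pattern = isRegex ? new RegExp(body.slice(1, -1)) : new RegExp(escapeRegExp(body));
  return { kind, pattern };
}

function parseFilterList(text: string): Rule[] {
  const rules: Rule[] = [];
  for (const line of text.split("\n")) {
    const rule = parseRule(line);
    if (rule) rules.push(rule);
  }
  return rules;
}

// Exceptions win: a whitelisted URL is never blocked.
function shouldBlock(url: string, rules: Rule[]): boolean {
  if (rules.some((r) => r.kind === "allow" && r.pattern.test(url))) return false;
  return rules.some((r) => r.kind === "block" && r.pattern.test(url));
}

const rules = parseFilterList(
  [
    "! sample list (hypothetical)",
    "/ads\\.js$/", // regex: any script named ads.js
    "doubleclick.net", // blacklist: literal domain substring
    "@@example.com/ads.js", // whitelist: allow this one site's ads.js
  ].join("\n"),
);

console.log(shouldBlock("https://news.test/static/ads.js", rules)); // true
console.log(shouldBlock("https://example.com/ads.js", rules)); // false
```

Checking exceptions first is what lets a user whitelist a single site without editing the blocking rules themselves.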

ADBlocker-Blocker:

Publishers and their representative trade bodies, on the other hand, argue that Internet ads provide revenue to website owners, which enables them to create or otherwise purchase content for their websites. Publishers claim that the prevalent use of ad-blocking software and devices could adversely affect website owners' revenue and, in turn, reduce the availability of free content on websites. So it is no wonder that publishers have begun to block or evict users found to be using #ADBlockers. (A page from my personal experience: I do not remember a time when I did not use an adblocker; before Mozilla, I used MyIE (Maxthon), which had configurable filters.) Lately, though, publishers have become more aggressive and have rolled out a slew of their own warriors, a.k.a. adblocker-blockers: nifty little utilities you can embed in your site so that traffic from adblock-enabled users is blocked until they disable their adblocker or whitelist your site. Some major publishers, like The Economist and Wired, have announced a novel approach: either disable your adblocker on their site or pay a small fee to see it without the clutter of advertisements. For sites that do not offer this option, or if you simply wish to override them, read on.
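Many adblocker-blockers work by planting "bait": an element with ad-like class names that an adblocker's element-hiding rules will hide. The sketch below models that check without a browser; the `BaitElement` type, the class names and the helper functions are hypothetical. In a real page the adblocker hides the element on its own, and the detector typically inspects something like the element's `offsetHeight`.

```typescript
// Toy model of "bait element" adblock detection (no browser needed).
// Class names and helper names are hypothetical.

interface BaitElement {
  classNames: string[];
  hidden: boolean; // in a real page: offsetHeight === 0, display: none, etc.
}

// Adblocker side: element-hiding rules keyed on CSS class names.
function applyElementHiding(el: BaitElement, hiddenClasses: Set<string>): void {
  if (el.classNames.some((c) => hiddenClasses.has(c))) {
    el.hidden = true;
  }
}

// Publisher side: plant a bait element that looks like an ad slot,
// let the blocker (if any) act on it, then see whether it survived.
function pageHasAdblocker(hiddenClasses: Set<string>): boolean {
  const bait: BaitElement = { classNames: ["adsbox", "ad-banner"], hidden: false };
  applyElementHiding(bait, hiddenClasses); // a real browser does this on its own
  return bait.hidden;
}

// The "adblocker-blocker": gate the content on the detection result.
function renderPage(hiddenClasses: Set<string>): string {
  return pageHasAdblocker(hiddenClasses)
    ? "Please disable your adblocker or whitelist this site."
    : "Article content";
}

console.log(renderPage(new Set(["ad-banner"]))); // blocker hides the bait: nag screen
console.log(renderPage(new Set<string>())); // no blocker: "Article content"
```

The detector never sees the adblocker directly; it only infers it from a side effect, which is exactly the kind of signal a counter-script can try to fake.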

Practice & Implementation:

So, enter Anti-Adblock Killer: https://github.com/reek/anti-adblock-killer

It's simple, really: it tricks sites that use #anti-adblocker technology into thinking you aren't using an adblocker. Using a JavaScript file (a userscript) and a filter list, Anti-Adblock Killer lets you keep your adblocker on when you visit a page that would usually make you disable it. This means you can work around adblocker bans on common news sites, like Forbes, that lock you out when you're detected.
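Another common detection trick is a bait script named like an ad file (here a hypothetical `ads.js`) that merely sets a flag; if the flag never appears, the page assumes an adblocker. One way a counter-script can defeat this is to set the flag itself before the page's scripts run (userscript managers offer `@run-at document-start` for this). The sketch below models that idea with a plain object standing in for `window`; `canRunAds`, `BAIT_URL` and `antiAdblockKillerShim` are illustrative names, not Anti-Adblock Killer's actual code.

```typescript
// Toy model of bait-script detection and of defeating it by spoofing the flag.
// `canRunAds`, BAIT_URL and antiAdblockKillerShim are illustrative names only.

type PageGlobals = Record<string, unknown>; // stands in for the browser's `window`

interface Page {
  globals: PageGlobals;
  blockedUrls: Set<string>; // requests the user's adblocker refuses to load
}

// Publisher's bait: a script named like an ad file whose only job is to set a flag.
const BAIT_URL = "https://publisher.example/ads.js";

function loadPageScripts(page: Page): void {
  if (!page.blockedUrls.has(BAIT_URL)) {
    page.globals.canRunAds = true; // bait script ran
  }
}

// Detector: if the flag is missing, assume the bait was blocked by an adblocker.
function adblockDetected(page: Page): boolean {
  return page.globals.canRunAds !== true;
}

// Counter-script: runs before the page's own scripts and sets the flag itself,
// so the detector sees what it expects even though ads.js was blocked.
function antiAdblockKillerShim(globals: PageGlobals): void {
  globals.canRunAds = true;
}

function visit(adblockOn: boolean, useShim: boolean): boolean {
  const page: Page = {
    globals: {},
    blockedUrls: new Set<string>(adblockOn ? [BAIT_URL] : []),
  };
  if (useShim) antiAdblockKillerShim(page.globals); // e.g. @run-at document-start
  loadPageScripts(page);
  return adblockDetected(page);
}

console.log(visit(true, false)); // true: adblocker on, detected
console.log(visit(true, true)); // false: adblocker on, detection fooled
console.log(visit(false, false)); // false: no adblocker
```

Ordering is the whole trick: the shim only works if it runs before the detector reads the flag, which is why such userscripts are usually injected as early as possible.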

It works against a number of different technologies used to detect #adblock users, and is likely to become part of the next #armsrace as publishers work out new ways to block #adblockers using #adblocker-blockers. If you're still reading, I will wrap up my narration and give step-by-step instructions on how to install and activate it.

Step-by-Step Instructions to Activate Anti-Adblock Killer:

- Step 1 – Get a Script Manager: install a userscript manager supported by your browser, such as Greasemonkey or Scriptish, Tampermonkey, Violentmonkey or NinjaKit; some browsers also support userscripts natively. Tampermonkey is available for most browsers. (*After installation, depending on your browser, you may need to restart the browser for it to take effect.)

- Step 2 – Subscribe to the Anti-Adblock Killer filter list in your adblocker.

- Step 3 – Install the Anti-Adblock Killer userscript in your script manager.

Once this is done, you're on your way to enjoying browsing free of adblocker-blocker pop-ups.