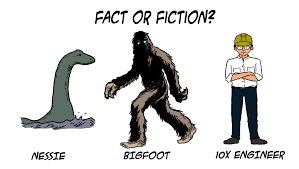

Is the myth of a “10X Developer” Real?

If you’re a software engineer, manager or leader, I am sure you have heard the term ‘10x developer’ used in discussions. It refers to developers who are purportedly 10 times more productive, or capable, than their peers, while it is a hotly contested category. Some refer to it very liberally, others deny that it even exists. In the last 40+ years, the ‘10X developer` has become a ‘Loch Ness` of the tech world, fueled by the hype associated with Silicon Valley.

I’m not about to delude myself into thinking that writing a blog on it to pass my verdict will put these theories to rest, but the question has gained enough traction that it deserves a little articulation.

Do 10x developers really exist, and if so, how would we distinguish them?

Framing the Issue

All of us can acknowledge that the range of skills in most human activities can be extensive. A marathon runner can cover roughly 10 times the distance that an untrained person could, while a professional chef can cook a 5-course meal in 1/5th the time it takes an average person to do a 2-course meal.

Coding, which is a hugely complex field unencumbered by physical limitations, should naturally show differences in the skill that vary by orders of magnitude. Thus, if by 10x developer we simply mean a person whose skill level is in a different league compared to someone else, then clearly they exist.

How could anyone argue otherwise?

Here’s the rub though, in the data-driven and lexically precise world of modern tech, that’s not what 10x developer means. Instead, a 10x developer is supposed to be someone who genuinely outperforms others by 10 times or more on some quantifiable scale. That ‘quantifiable scale’ is where the problems start.

Where did the term actually come from? Enter Coding War Games.

Coding War Games

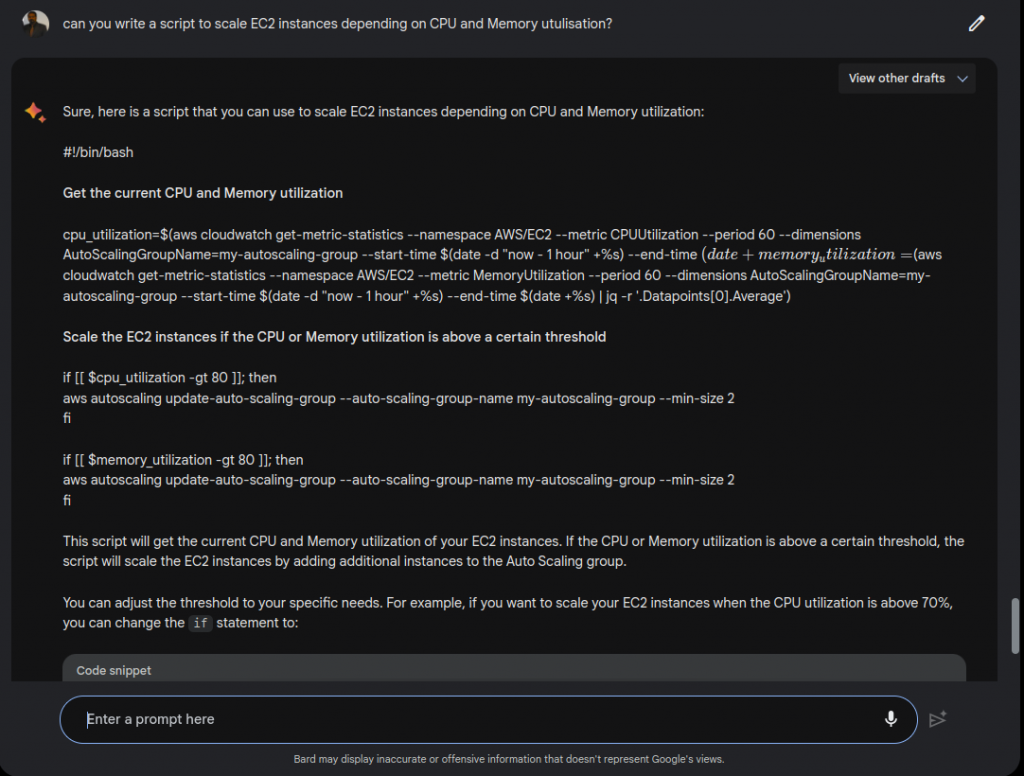

Tom DeMarco and Tim Lister have conducted the “Coding War Games” since 1977. This is a public productivity survey in which teams of software implementors from different organizations compete to complete a series of benchmarks in minimal time with minimal defects. They’ve had over 600 developers participate. Its results are publicly available and is very informative, to say the least. Jeff Lester published a wonderful piece on the origins of the 10X developer here.

The top findings from these are,

1, Get your working environment Right

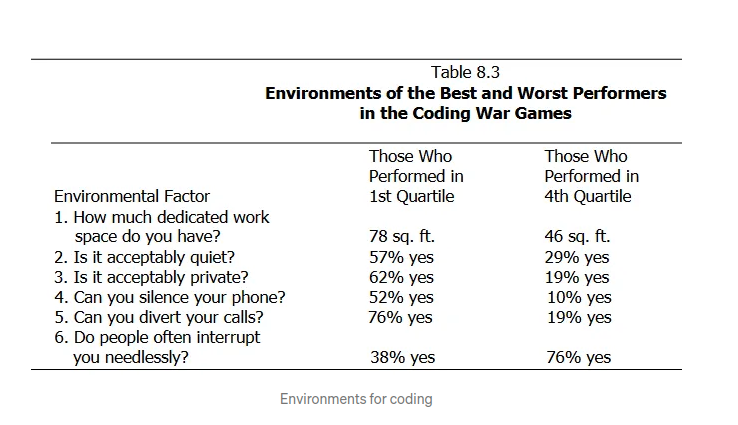

The overriding observation from this study is that quiet, private, dedicated working space with fewer interruptions led to groups that performed significantly better.

2, Remove the Net Negative Producing Programmer

Some developers are “net negative producing programmers” (NNPP), that is they produce so many defects that removing them from the team increases productivity. This is the opposite of a 10X developer, these people are the ones that make the team productivity go from bad to worse.

The Problem with Measuring ‘Skill’

Even where skill can vary wildly, differences will not necessarily be quantifiable. A talented artist may know how to create a painting that teleports you to a whole other world compared to an average artiste who can transport you to the scene.

But the question is, can you attach numbers to that painting’s beauty?

The work of a developer isn’t nearly as abstract, but not all of it can be reduced to metrics either, and definitely not the programming skill itself.

A less glamorous approach may be to judge a 10x dev not in terms of skill but in terms of productivity. Someone who can write 500 lines of code when it takes others to write 50 would then fall in that category.

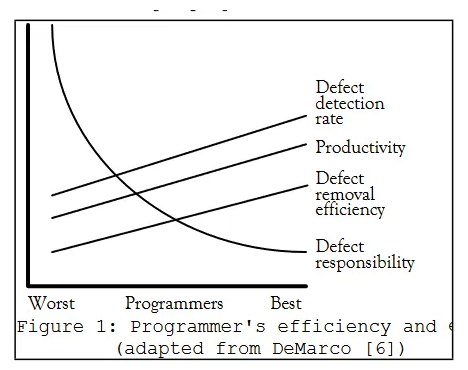

If you know anything about programming, however, you’ve probably already spotted the problem with this line of thinking. Longer code isn’t necessarily more efficient, and for most people there tends to be a positive correlation between how quickly one works and how many bugs one creates.

This is not to say that programmers can’t produce bug-free code much faster than their peers. Where this statement proves fallacious though, is in trying to peg that difference to a single metric. There are myriad factors at play that will affect a developer’s productivity outside of their skill, including their team and the environment they find themselves. In fact, depending on the situation, the 10x tag may be inaccurate because a developer could be programming well over 10 times as much as another and still produce 1/10th of the ‘Outcomes’!

What should be our conclusion? There can be no doubt that the field of programming has its own Mozarts and Vincent Van Goghs, and few would object if these people were described as being ‘orders of magnitude’ better than the rest. But it is important to recognize that this is only a figure of speech, and not something meant to be used according to its precise quantitative meaning.

I can’t presume to speak for the tech industry as a whole, but I for one have noticed a worrying tendency to read the expression ‘10x developer’ literally.

Ultimately this does more harm than good, as it spreads the myth that there is some universal metric whereby every programmer’s value can always be quantified.

Important qualities like creativity, client focus and teamwork are entirely omitted in this way of thinking, which is why my final suggestion is to stop worrying about lofty 10x developers and whether you are, aren’t, or may or not become one.

Simply focus on being the best developer you can be. That will always be enough.

References & Further Reading

Origin of a 10X developer

https://medium.com/ingeniouslysimple/the-origins-of-the-10x-developer-2e0177ecef60

https://gwern.net/doc/cs/algorithm/2001-demarco-peopleware-whymeasureperformance.pdf

https://news.ycombinator.com/item?id=22349531