Defence Tech at Risk: Palantir, Anduril, and Govini in the New AI Arms Race

A Chink in Palantir and Anduril’s Armour? Govini and Others Are Unsheathing the Sword

When Silicon Valley Code Marches to War

A U.S. Army Chinook rises over Gyeonggi Province, carrying not only soldiers and equipment but streams of battlefield telemetry, encrypted packets of sight, sound and position. Below, sensors link to vehicles, commanders to drones, decisions to data. Yet a recent Army memo reveals a darker subtext: the very network binding these forces together has been declared “very high risk.”

The battlefield is now a software construct. And the architects of that code are not defence primes from the industrial era but Silicon Valley firms, Anduril and Palantir. For years, they have promised that agility, automation and machine intelligence could redefine combat efficiency. But when an internal memo brands their flagship platform “fundamentally insecure,” the question is no longer about innovation. It is about survival.

Just as the armour shows its first cracks, another company, Govini, crosses $100 million in annual recurring revenue, sharpening its own blade in the same theatre.

When velocity becomes virtue and verification an afterthought, the chink in the armour often starts in the code.

The Field Brief

- A U.S. Army CTO memo calls Anduril–Palantir’s NGC2 communications platform “very high risk.”

- Vulnerabilities: unrestricted access, missing logs, unvetted third-party apps, and hundreds of critical flaws.

- Palantir’s stock drops about 7.5 %; Anduril dismisses the findings as outdated.

- Meanwhile, Govini surpasses $100 M ARR with $150 M funding from Bain Capital.

- The new arms race is not hardware; it is assurance.

Silicon Valley’s March on the Pentagon

For over half a century, America’s defence economy was dominated by industrial giants: Lockheed Martin, Boeing, and Northrop Grumman. Their reign was measured in steel, thrust and tonnage. But the twenty-first century introduced a new class of combatant: code.

Palantir began as an analytics engine for intelligence agencies, translating oceans of data into patterns of threat. Anduril followed with a hardware-agnostic platform marrying drones, sensors and AI decision loops into one mesh of command. Both firms embodied the “move fast” ideology of Silicon Valley: speed as a substitute for bureaucracy.

The Pentagon, fatigued by procurement inertia, welcomed the disruption. Billions flowed to agile software vendors promising digital dominance. Yet agility without auditability breeds fragility. And that fragility surfaced in the Army’s own words.

Inside the Memo: The Code Beneath the Uniform

The leaked memo, authored by Army CTO Gabriele Chiulli, outlines fundamental failures in the Next-Generation Command and Control (NGC2) prototype, a joint effort by Anduril, Palantir, Microsoft and others.

“We cannot control who sees what, we cannot see what users are doing, and we cannot verify that the software itself is secure.”

- US Army memo

The findings are stark: users at varying clearance levels could access all data; activity logging was absent; several embedded applications had not undergone Army security assessment; one revealed twenty-five high-severity vulnerabilities, while others exceeded two hundred.

Translated into security language, the platform lacks role-based access control, integrity monitoring, and cryptographic segregation of data domains. Strategically, this means command blindness: an adversary breaching one node could move laterally without a trace.

In the lexicon of cyber operations, that is not “high risk.” It is mission failure waiting for confirmation.
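The two missing controls named above, access decisions tied to clearance and role, and a record of every decision, can be illustrated with a minimal sketch. Nothing here reflects how NGC2 is actually built: the clearance labels, the `User`, `Resource` and `AccessGate` names, and the in-memory audit list are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical clearance ordering, for illustration only.
CLEARANCE_RANK = {"UNCLASSIFIED": 0, "SECRET": 1, "TOP_SECRET": 2}


@dataclass(frozen=True)
class User:
    name: str
    clearance: str
    roles: frozenset


@dataclass(frozen=True)
class Resource:
    name: str
    classification: str
    required_role: str


@dataclass
class AccessGate:
    audit_log: list = field(default_factory=list)

    def check(self, user: User, resource: Resource) -> bool:
        """Allow only with sufficient clearance AND the required role."""
        # Unknown user clearances rank below everything; unknown resource
        # labels rank above everything, so both cases end in a denial.
        user_rank = CLEARANCE_RANK.get(user.clearance, -1)
        needed_rank = CLEARANCE_RANK.get(resource.classification, len(CLEARANCE_RANK))
        allowed = user_rank >= needed_rank and resource.required_role in user.roles
        # Record every decision, allowed or denied, so activity stays traceable.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user.name,
            "resource": resource.name,
            "allowed": allowed,
        })
        return allowed
```

In this sketch a SECRET-cleared analyst holding only an `intel` role would be refused a TOP_SECRET fires feed, and the refusal itself would appear in `audit_log`. A real system would write that log to tamper-evident storage rather than a Python list.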

The Doctrine of Velocity

Anduril’s rebuttal was swift. The report, they claimed, represented “an outdated snapshot.” Palantir insisted that no vulnerabilities were found within its own platform.

Their responses echo a philosophy as old as the Valley itself: innovation first, audit later. The Army’s adoption of continuous Authorization to Operate (cATO) sought to balance agility with accountability, allowing updates to roll out in days rather than months. Yet cATO is only as strong as the telemetry beneath it. Without continuous evidence, continuous authorisation becomes continuous exposure.
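One way to picture “continuous evidence” is a gate that refuses to treat an authorisation as valid unless every required piece of evidence is present, passing and recent. The sketch below is an illustration only: the control names, the 24-hour window and the record layout are assumptions, not taken from any cATO guidance.

```python
from datetime import datetime, timedelta

# Hypothetical control names and a hypothetical freshness window.
REQUIRED_EVIDENCE = ("vulnerability_scan", "access_review", "log_integrity_check")
MAX_AGE = timedelta(hours=24)


def authorisation_holds(evidence: dict, now: datetime) -> tuple:
    """Return (ok, problems); ok is True only if all evidence is fresh and passing."""
    problems = []
    for control in REQUIRED_EVIDENCE:
        record = evidence.get(control)
        if record is None:
            problems.append(f"{control}: missing")
        elif not record["passed"]:
            problems.append(f"{control}: failed")
        elif now - record["collected_at"] > MAX_AGE:
            problems.append(f"{control}: stale")
    return (not problems, problems)
```

The design choice worth noting is that missing or stale evidence counts as a failure, not as a neutral result: silence from the telemetry is treated as a reason to withhold authorisation.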

This is the paradox of modern defence tech: DevSecOps without DevGovernance. A battlefield network built for iteration risks treating soldiers as beta testers.

Govini’s Counteroffensive: Discipline over Demos

While Palantir’s valuation trembled, Govini’s ascended. The Arlington-based startup announced $100 million in annual recurring revenue and secured $150 million from Bain Capital. Its CEO, Tara Murphy Dougherty — herself a former Palantir executive — emphasised the company’s growth trajectory and its $900 million federal contract portfolio.

Govini’s software, Ark, is less glamorous than autonomous drones or digital fire-control systems. It maps the U.S. military’s supply chain, linking procurement, logistics and readiness. Where others promise speed, Govini preaches structure. It tracks materials, suppliers and vulnerabilities across lifecycle data — from the factory floor to the frontline.

If Anduril and Palantir forged the sword of rapid innovation, Govini is perfecting its edge. Precision, not pace, has become its competitive advantage. In a field addicted to disruption, Govini’s discipline feels almost radical.

Technical Reading: From Vulnerability to Vector

The NGC2 memo can be interpreted through a simple threat-modelling lens:

- Privilege Creep → Data Exposure — Excessive permissions allow information spillage across clearance levels.

- Third-Party Applications → Supply-Chain Compromise — External code introduces unassessed attack surfaces.

- Absent Logging → Zero Forensics — Breaches remain undetected and untraceable.

- Unverified Binaries → Persistent Backdoors — Unknown components enable long-term infiltration.

These patterns mirror civilian software ecosystems: typosquatted dependencies on npm, poisoned PyPI packages, unpatched container images. The military variant merely amplifies consequences; a compromised package here could redirect an artillery feed, not a webpage.

Modern defence systems must therefore adopt commercial best practice at military scale: Software Bills of Materials (SBOMs), continuous vulnerability correlation, maintainer-anomaly detection, and cryptographic provenance tracking.
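As a rough illustration of vulnerability correlation against an SBOM, the sketch below matches a list of components against a list of advisories. Real SBOMs are usually exchanged in formats such as CycloneDX or SPDX and real advisories use version ranges; the simplified `Component` and `Advisory` shapes, and the exact-version matching, are assumptions made to keep the example short.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str
    version: str


@dataclass(frozen=True)
class Advisory:
    advisory_id: str
    package: str
    affected_versions: frozenset
    severity: str


def correlate(sbom, advisories):
    """Return (component, advisory) pairs where an SBOM entry is an affected version."""
    # Index advisories by package name so each component is a single lookup.
    by_package = {}
    for adv in advisories:
        by_package.setdefault(adv.package, []).append(adv)
    findings = []
    for comp in sbom:
        for adv in by_package.get(comp.name, []):
            if comp.version in adv.affected_versions:
                findings.append((comp, adv))
    return findings
```

Run continuously, a check of this kind turns a newly published advisory into a list of affected deployments instead of a manual search through build records.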

Metadata-only validation, verifying artefacts without exposing source, is emerging as the new battlefield armour. Security must become declarative, measurable, and independent of developer promises.
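A minimal sketch of the idea: the verifier never sees source code, only an artefact’s bytes and a manifest of expected SHA-256 digests. The manifest layout and the function names are hypothetical, and the sketch assumes the manifest itself arrives through a trusted, signed channel, which a real provenance system would have to enforce separately.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of the artefact bytes."""
    return hashlib.sha256(data).hexdigest()


def verify_artefact(name: str, data: bytes, manifest: dict) -> bool:
    """Check an artefact's digest against the digest recorded in the manifest."""
    expected = manifest.get(name)
    if expected is None:
        return False  # artefacts absent from the manifest are rejected, not trusted
    # compare_digest performs a constant-time comparison of the two digests.
    return hmac.compare_digest(sha256_hex(data), expected)
```

A digest check only proves that the artefact matches what the manifest describes. Establishing who produced the manifest requires a signature over it, which this sketch leaves out.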

Procurement and Policy: When Compliance Becomes Combat

The implications extend far beyond Anduril and Palantir. Procurement frameworks themselves require reform. For decades, contracts rewarded milestones — prototypes delivered, demos staged, systems deployed. Very few tied payment to verified security outcomes.

Future defence contracts must integrate technical evidence: SBOMs, audit trails, and automated compliance proofs. Continuous monitoring should be a contractual clause, not an afterthought. The Department of Defense’s push towards Zero Trust and CMMC 2.0 compliance is a start, but implementation must reach code level.

Governments cannot afford to purchase vulnerabilities wrapped in innovation rhetoric. The next generation of military contracting must buy assurance as deliberately as it buys ammunition.

Market Implications: Valuation Meets Validation

The markets reacted predictably: Palantir’s shares slid 7.5 %, while Govini’s valuation swelled with investor confidence. Yet beneath these fluctuations lies a structural shift.

Defence technology is transitioning from narrative-driven valuation to evidence-driven validation. The metric investors increasingly prize is not just recurring revenue but recurring reliability: the ability to prove resilience under audit.

Trust capital, once intangible, is becoming quantifiable. In the next wave of defence-tech funding, startups that embed assurance pipelines will attract the same enthusiasm once reserved for speed alone.

The Lessons of the Armour — Ten Principles for Digital Fortification

For old-school practitioners like me, here are the lessons learnt, viewed through the classic lens of Saltzer and Schroeder.

| No. | Modern Principle (Defence-Tech Context) | Saltzer & Schroeder Principle | Practical Interpretation in Modern Systems |
| --- | --- | --- | --- |
| 1 | Command DevSecOps – Governance must be embedded, not appended. Every deployment decision is a command decision. | Economy of Mechanism | Keep security mechanisms simple, auditable, and centrally enforced across CI/CD and mission environments. |
| 2 | Segment by Mission – Separate environments and privileges by operational need. | Least Privilege | Each actor, human or machine, receives the minimum access required for the mission window. Segmentation prevents lateral movement. |
| 3 | Log or Lose – No event should be untraceable. | Complete Mediation | Every access request and data flow must be logged and verified in real time. Enforce tamper-evident telemetry to maintain operational integrity. |
| 4 | Vet Third-Party Code – Treat every dependency as a potential adversary. | Open Design | Assume no obscurity. Transparency, reproducible builds and independent review are the only assurance that supply-chain code is safe. |
| 5 | Maintain Live SBOMs – Generate provenance at build and deployment. | Separation of Privilege | Independent verification of artefacts through cryptographic attestation ensures multiple checks before code reaches production. |
| 6 | Embed Rollback Paths – Every deployment must have a controlled retreat. | Fail-Safe Defaults | When uncertainty arises, systems must default to a known-safe state. Rollback or isolation preserves mission continuity. |
| 7 | Automate Anomaly Detection – Treat telemetry as perimeter. | Least Common Mechanism | Shared services such as APIs or pipelines should minimise trust overlap. Automated detectors isolate abnormal behaviour before propagation. |
| 8 | Demand Provenance – Trust only what can be verified cryptographically. | Psychological Acceptability | Verification should be effortless for operators. Provenance and signatures must integrate naturally into existing workflow tools. |
| 9 | Audit AI – Governance must evolve with autonomy. | Separation of Privilege and Economy of Mechanism | Multiple models or oversight nodes should validate AI decisions. Explainability should enhance, not complicate, assurance. |
| 10 | Measure After Assurance – Performance metrics follow proof of security, never precede it. | Least Privilege and Fail-Safe Defaults | Prioritise verifiable assurance before optimisation. Treat security evidence as a precondition for mission performance metrics. |
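The “tamper-evident telemetry” called for under principle 3 can be approximated with a hash chain, where each log entry commits to the one before it. The sketch below is one common construction, not a description of any fielded system; the entry layout and function names are assumptions for illustration.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, event: dict) -> str:
    # Serialise with sorted keys so the same event always hashes the same way.
    payload = json.dumps(event, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("ascii") + payload).hexdigest()


def append_event(log: list, event: dict) -> None:
    """Append an event whose hash covers both the event and the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": _entry_hash(prev_hash, event)})


def verify_chain(log: list) -> bool:
    """Recompute every hash; any edited, reordered or removed middle entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _entry_hash(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True
```

A chain like this detects edits to recorded history but not truncation of the most recent entries, so the latest hash would still need to be anchored somewhere the log’s operator cannot rewrite.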

The Sword and the Shield

The codebase has become the battlefield. Every unchecked commit, every unlogged transaction, carries kinetic consequence.

Anduril and Palantir forged the sword, algorithms that react faster than human cognition. But Govini, and others of its kind, remind us that the shield matters as much as the blade. In warfare, resilience is victory’s quiet architect.

The lesson is not that speed is dangerous, but that speed divorced from verification is indistinguishable from recklessness. The future of defence technology belongs to those who master both: the velocity to innovate and the discipline to ensure that innovation survives contact with reality.

In this new theatre of code and command, it is not the flash of the sword that defines power — it is the assurance of the armour that bears it.

References & Further Reading

- Mike Stone, Reuters (3 Oct 2025) — “Anduril and Palantir battlefield communication system ‘very high risk,’ US Army memo says.”

- Samantha Subin, CNBC (10 Oct 2025) — “Govini hits $100 M in annual recurring revenue with Bain Capital investment.”

- NIST SP 800-218: Secure Software Development Framework (SSDF).

- U.S. DoD Zero Trust Strategy (2022).

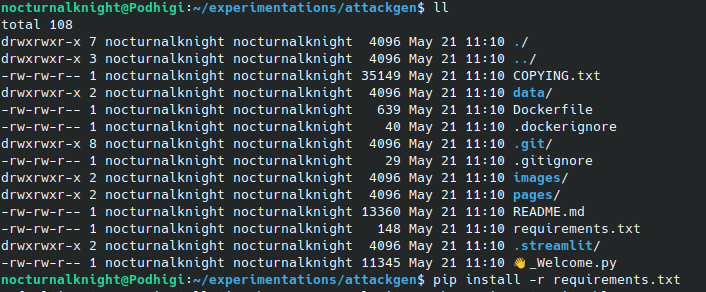

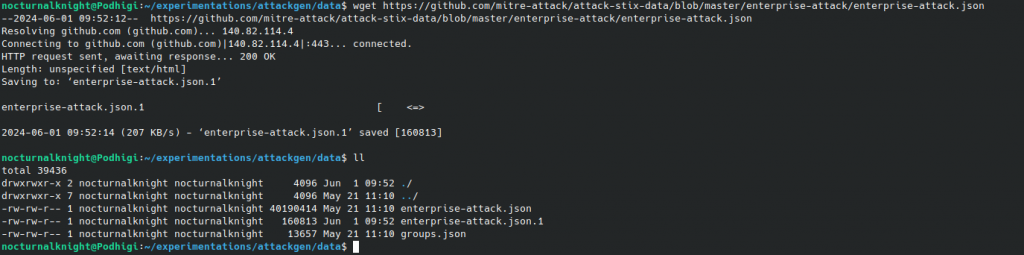

- MITRE ATT&CK for Defence Systems.